Davit Buniatyan, despite his young age, is an extraordinary entrepreneur and scientist in the field of artificial intelligence. This is an inspiring story that starts with studying how the brain works and moves on to actually building one. It is a journey that begins at Princeton University, where he is a Ph.D. candidate, and extends to Y Combinator, where he co-founded Snark AI.

The world has seen significant expansion in AI during the last five years. The US, China, Canada, France and Germany have been pouring billions of dollars into building AI infrastructure. The success of Deep Learning at recognizing objects in images has had a tremendous impact on the everyday lives of billions. Applications include recognizing our faces, talking to virtual assistants and building self-driving cars.

Terabytes, or even petabytes, of data are required to train high-accuracy models that can be deployed in real life. Huge computational resources are required to run so-called Deep Neural Networks. A Deep Neural Network consists of layers of artificial neurons whose computations can be expressed with simple linear algebra. By stacking many layers and feeding them a lot of data, these models learn complex patterns that help identify or classify objects in images, or even convert speech to text.
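To make the "simple linear algebra" concrete, here is a toy sketch in Python. It assumes fully connected layers with a ReLU nonlinearity (a common choice, not something stated in this article): each layer multiplies its input vector by a weight matrix, adds a bias and applies the nonlinearity, and stacking layers simply feeds one layer's output into the next. The function names and weights are hypothetical and for illustration only; real frameworks run the same arithmetic on GPUs with heavily optimized matrix libraries.

```python
def matvec(W, x):
    # Multiply matrix W (a list of rows) by vector x.
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def relu(v):
    # Elementwise nonlinearity: negative values become 0.
    return [max(0.0, a) for a in v]


def dense_layer(W, b, x):
    # One layer of artificial neurons: y = relu(W x + b).
    return relu([a + bi for a, bi in zip(matvec(W, x), b)])


def forward(layers, x):
    # Stacking layers: the output of one layer is the input to the next.
    for W, b in layers:
        x = dense_layer(W, b, x)
    return x


# Usage: a tiny two-layer network with hand-picked (hypothetical) weights.
layers = [
    ([[1.0, -1.0], [0.5, 0.5]], [0.0, 0.0]),  # layer 1: 2 inputs -> 2 neurons
    ([[1.0, 1.0]], [0.0]),                    # layer 2: 2 inputs -> 1 neuron
]
print(forward(layers, [2.0, 1.0]))  # → [2.5]
```

Training, which this sketch omits, is the process of adjusting the numbers in `W` and `b` from data; that is where the terabytes of data and large compute budgets come in.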

The butterfly effect to Princeton University

Davit was born in Yerevan and studied at Quantum College. In 2013, he was accepted to University College London to complete his BSc in Computer Science. In 2014, he took his first step in the AI field: together with his friends, he built a machine learning algorithm that learns users' news preferences. The project was one of the top 5 out of hundreds at a prestigious startup event in London, TechCrunch Disrupt. Subsequently, he and his project were featured in TechCrunch news.

“Newsly Hack Is Tinder For News Articles” https://techcrunch.com/2014/10/19/newsly-hack/

To train Deep Learning models efficiently, researchers often use GPUs, special hardware originally designed for video games. To train a better news recommendation model, his friend Karen Hambardzumyan asked for a GPU, but they couldn't afford one. So Davit deliberately enrolled in a two-day GPU computing course whose participants were offered a 50% discount after completing it. “My only goal was to get the discount so that we could buy a single GPU and train significantly more accurate models with more data,” says Davit. Little did he know that during that course he would meet a professor who would introduce him to his future bachelor's thesis supervisor, Prof. Lourdes Agapito. Working under Prof. Agapito's supervision, Davit learned a great deal about conducting research in Computer Science and developing sophisticated AI algorithms from scratch. Prof. Agapito recommended Davit to one of the most prestigious Computer Science Ph.D. programs in the world, where pioneers of Computer Science and AI such as Alan Turing, Claude E. Shannon and Marvin Minsky conducted research.

At the age of 20, Davit received an offer from Princeton University and was awarded the Gordon Wu Fellowship, the most prestigious fellowship for an incoming Ph.D. student.

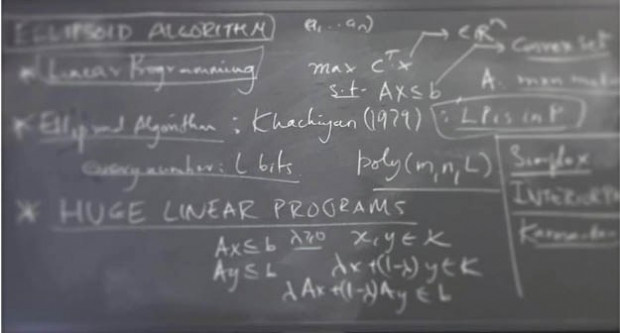

Photo taken at Princeton University in 2016 during a class on linear programming and Dr. Leonid Khachiyan's ellipsoid algorithm

“One of the very first lessons I learned at Princeton: real Computer Science is done on the blackboard, without any computers and with lots of heavy math.” During one of his classes, the professor explained how Soviet Armenian mathematician Dr. Leonid Khachiyan's significant theoretical breakthrough made US news: “A Soviet Discovery Rocks World of Mathematics” (The New York Times, 1979). Dr. Khachiyan later became a professor at Rutgers University.

Having a conversation with AI

In 2016, Amazon announced the Alexa Prize competition. The Princeton team was one of 10 selected teams that received funding to build a chatbot that can hold a general conversation with a human for more than 20 minutes. It is somewhat similar to the Turing test, except that the human knows an AI is on the other side of the line. “Analyzing tens of thousands of conversations our bot had with random people, I was so happy when I noticed that it had achieved the goal.” Details of the results were described in a technical paper. The project was also featured on CNET.

While working on this project, Davit also met Jason Ge, his future co-founder, and the following year he received an AWS Machine Learning award.

Tracing Neurons

In his famous TED talk, Princeton Prof. Sebastian Seung popularized the term "connectome". To understand how the brain works, looking at a single neuron is not enough; one needs to understand its connections. Upon arriving at Princeton, Davit joined an ambitious project to reconstruct the connectivity of the mouse brain, led by Prof. Seung and his students at the Princeton Neuroscience Institute.

Members of the Seung Lab, from left. Sitting: Alexander Bae, Thomas Macrina, Sergiy Popovych, Jingpeng Wu. Standing: Nicholas Turner, Sven Dorkenwald, Chris Jordan, Davit Buniatyan, Zhen Jia, Sebastian Seung, William Silversmith, Ran Lu, Kisuk Lee, Kyle Luther, Nico Kemnitz, William Wong, Shang Mu, Alyssa Wilson, Maysoun Husseini. Photo courtesy of Janet Jackel

The mouse brain is cut into very thin slices (40 nm thick) using a diamond knife, and an electron microscope is used to image the slices. “We could get thousands of slices, each 100,000 pixels wide. Each one could be slightly deformed, damaged or missing entirely. To reconstruct neurons from those images, we needed to align them first.” Davit worked on the problem of aligning the images into a volume without discontinuities. It is similar to reassembling a sliced loaf of bread: once the bread is sliced, there is no ground truth left to compare against. A single mistake can propagate into a wrong connectome, so it is very important to be error-proof from the beginning.

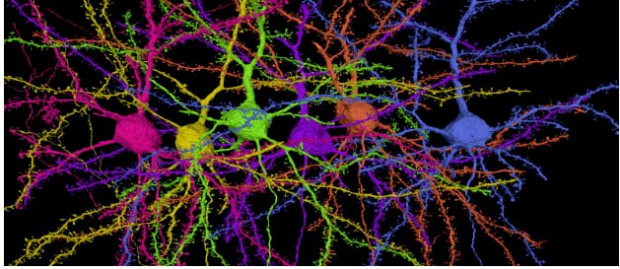

A reconstruction of six pyramidal cells, the most common type of cell in the cortex. The cells' dendrites are covered in tiny spines, the sites of synaptic connections. Credit: Seung lab

Together with his colleagues and advised by Prof. Seung, Davit developed an unsupervised algorithm that needs almost no human supervision to reconstruct a volumetric image of the mouse brain. The algorithm learns by itself how to align the slices to each other: it compares two adjacent images and finds the corresponding locations. At every iteration, the images are transformed by Deep Neural Networks to produce stronger correspondences. Without human intervention, it was able to self-correct errors that are very hard to notice even with the human eye. The algorithm was accepted for presentation at the CVC conference and will be published in the Springer series "Advances in Intelligent Systems and Computing".
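The basic step of comparing two adjacent slices and finding where they correspond can be illustrated with a classical technique: brute-force block matching, which tries every integer shift within a small window and keeps the one with the lowest mean squared difference over the overlapping pixels. This is a swapped-in illustration under simplifying assumptions (pure translation, equally sized grayscale images stored as nested lists), not the Seung lab's algorithm, which learns the correspondence with Deep Neural Networks and handles deformations far richer than a single shift. The helper names `mean_sq_diff` and `best_shift` are hypothetical.

```python
def mean_sq_diff(fixed, moving, dy, dx):
    """Mean squared difference between `fixed` and `moving` displaced by
    (dy, dx), computed only over the pixels where the two images overlap.
    Assumes both images have the same height and width."""
    h, w = len(fixed), len(fixed[0])
    total, count = 0.0, 0
    for y in range(h):
        for x in range(w):
            my, mx = y - dy, x - dx  # pixel of `moving` that lands on (y, x)
            if 0 <= my < h and 0 <= mx < w:
                d = fixed[y][x] - moving[my][mx]
                total += d * d
                count += 1
    return total / count if count else float("inf")


def best_shift(fixed, moving, max_shift):
    """Try every shift in [-max_shift, max_shift] along both axes and return
    the (dy, dx) with the lowest mean squared difference."""
    best_score, best = float("inf"), (0, 0)
    for dy in range(-max_shift, max_shift + 1):
        for dx in range(-max_shift, max_shift + 1):
            score = mean_sq_diff(fixed, moving, dy, dx)
            if score < best_score:
                best_score, best = score, (dy, dx)
    return best


# Usage: a synthetic 8x8 "slice" and a copy displaced by 1 row and 2 columns.
def pattern(y, x):
    u, v = y + 5, x + 5
    return u * u + 3 * v * v + u * v

moving = [[pattern(y, x) for x in range(8)] for y in range(8)]
fixed = [[pattern(y - 1, x - 2) for x in range(8)] for y in range(8)]
print(best_shift(fixed, moving, max_shift=3))  # → (1, 2)
```

On real sections 100,000 pixels wide, with local warps, tears and missing slices, such an exhaustive rigid search would be both far too slow and far too inflexible, which is why learned, non-rigid alignment is needed.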

“While I was training the algorithm, the process occasionally crashed. I had one bug in the code that was corrupting it. I looked into this problem for six or seven months and couldn't figure out what was wrong,” Davit says of his research experience. “Then I told my advisor about the problem. He asked me to describe the code to him on the blackboard in mathematical terms (not pseudocode). After a week of math notation, a problem that was thought to be a software issue (a float overflow) was unexpectedly found on the blackboard.” This confirmed the first lesson Davit had learned in his class at Princeton.

Prof. Seung and his lab use artificial neural networks to trace how real neural networks are connected. Looking at each individual cell and its connections as a whole will help us understand how the brain learns. “I am really lucky to be able to work with Prof. Seung and my labmates,” Davit says.

Connecting Computational Power

A tiny part of the mouse brain contains terabytes, or even petabytes, of data. Running Deep Neural Networks at this scale requires a lot of compute resources. “At NeurIPS (an AI conference), Jason and I were wondering how we could connect all GPUs into a single network to run neural networks.” To do that, Davit and his Ph.D. colleagues came up with a unique algorithm that can run neural networks and cryptomining at the same time, on the same GPU. It helped incentivize cryptominers to run neural networks during the Bitcoin boom.

“In contrast to other algorithms that switch between the two workloads, our algorithm could actually run both at the same time using the same amount of energy.” TechCrunch wrote another article about this invention. Davit and the team were accepted into Y Combinator, the top startup accelerator known for its very low acceptance rate (below 1%).

Jason Ge, Sergiy Popovych and Davit Buniatyan after being accepted to Y Combinator, 2018

“While we were doing research at Princeton, we found that the ecosystem for deep learning in the cloud was very immature. It was very costly and difficult to train and deploy large-scale deep learning in the cloud. That inspired us to start a company to solve the problem,” says Jason Ge, Princeton Ph.D. and co-founder of Snark AI.

Snark AI builds a deep learning platform in the cloud that helps companies scale their AI algorithms. For example, processing 100 million documents or millions of images can be easily achieved using their software on top of any cloud provider. According to Davit, “Companies can significantly speed up the research cycle from training to deploying ML models.”

Snark AI has attracted $1.7 million in investment. Investors include Y Combinator, Fenox Ventures, Tribe Capital, Baidu VC, Liquid 2 and many prominent angels from Silicon Valley. The company also received investment from Armenian investors, including SmartGate VC.

Next steps

Not long ago he couldn't afford a single GPU; now his software controls tens of thousands of GPUs in a single network to train and deploy deep learning models.

Mapping the whole human brain is expected to become feasible only in about 50 years. Davit has the ambition to speed up this process. He also plans to open an applied ML lab in Armenia.

Jensen Huang, CEO and founder of NVIDIA, the largest GPU producer